Happy Birthday, CDP Public Cloud

Cloudera

OCTOBER 13, 2020

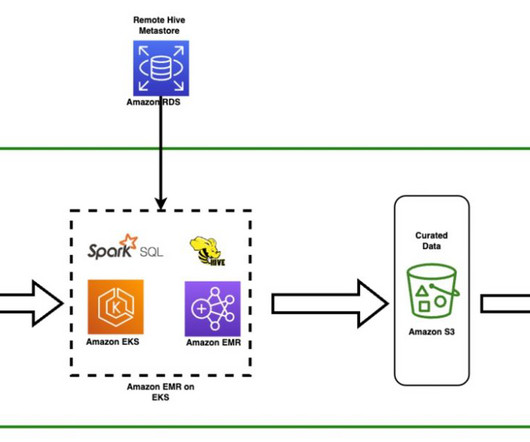

In the beginning, CDP ran only on AWS with a set of services that supported a handful of use cases and workload types:

CDP Data Warehouse: a Kubernetes-based service that allows business analysts to deploy data warehouses with secure, self-service access to enterprise data.

That Was Then.

New Services.
