SAP Datasphere Powers Business at the Speed of Data

Rocket-Powered Data Science

MARCH 20, 2023

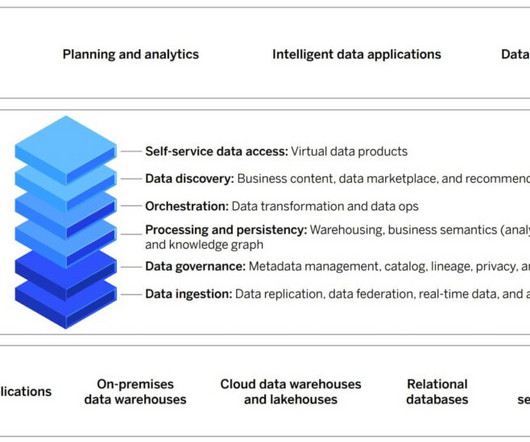

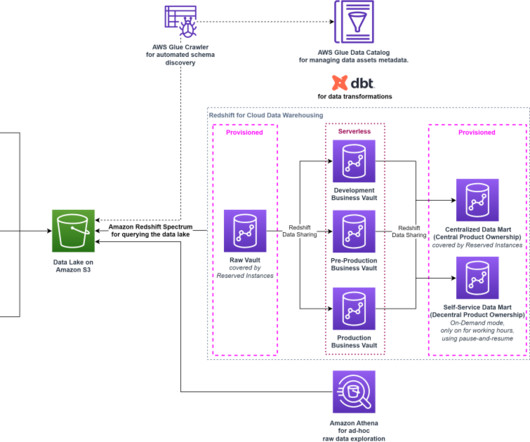

Datasphere goes beyond the “big three” end-user requirements for data usage (ease of discovery, access, and delivery) to include data orchestration (DataOps and data transformations) and business data contextualization (semantics, metadata, and catalog services).
