Modernize a legacy real-time analytics application with Amazon Managed Service for Apache Flink

AWS Big Data

OCTOBER 11, 2023

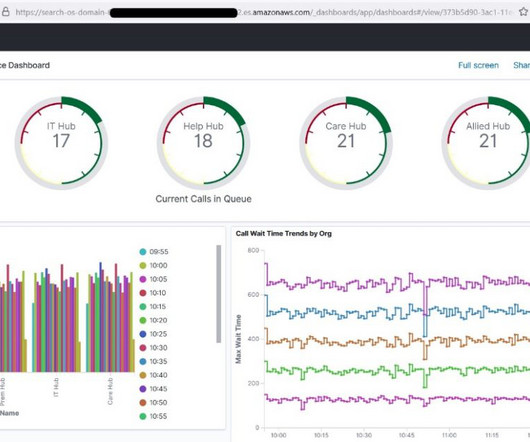

Traditionally, such a legacy call center analytics platform would be built on a relational database that stores data from streaming sources. Data transformations through stored procedures and use of materialized views to curate datasets and generate insights is a known pattern with relational databases.

Let's personalize your content