From Blob Storage to SQL Database Using Azure Data Factory

Analytics Vidhya

APRIL 29, 2022

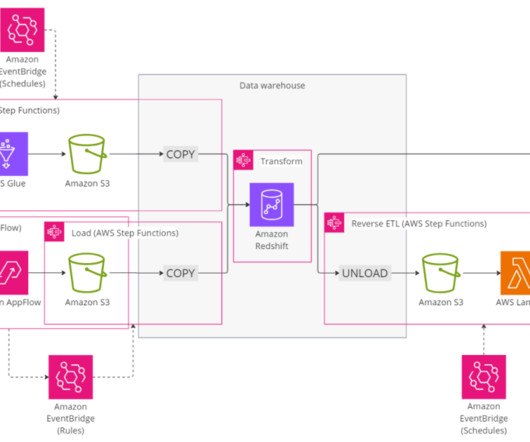

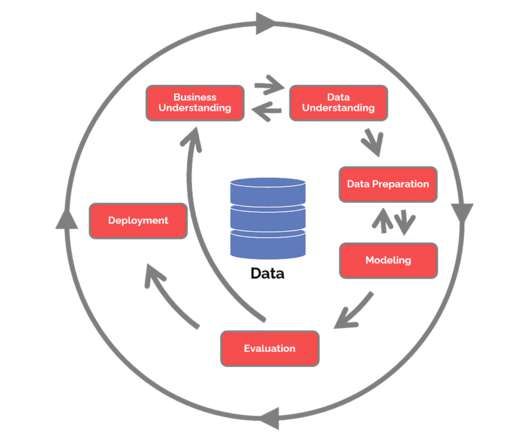

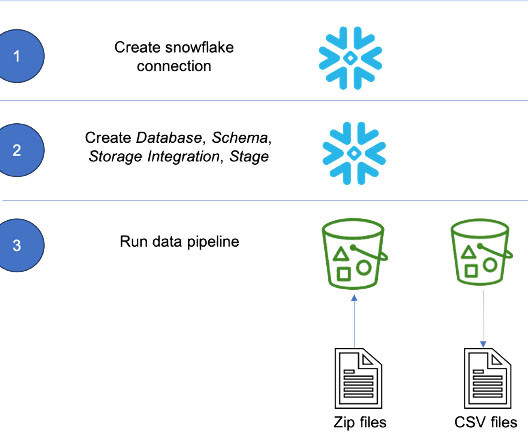

This article was published as a part of the Data Science Blogathon. Introduction Azure data factory (ADF) is a cloud-based ETL (Extract, Transform, Load) tool and data integration service which allows you to create a data-driven workflow. In this article, I’ll show […].

Let's personalize your content