Business Strategies for Deploying Disruptive Tech: Generative AI and ChatGPT

Rocket-Powered Data Science

FEBRUARY 15, 2023

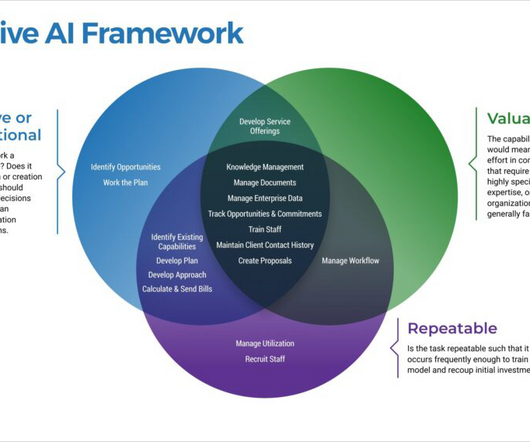

Since ChatGPT is built from large language models that are trained against massive data sets within your organization (mostly business documents, internal text repositories, and similar resources), attention must be given to the stability, accessibility, and reliability of those resources. Test early and often.
