Beyond “Prompt and Pray”

O'Reilly on Data

JANUARY 21, 2025

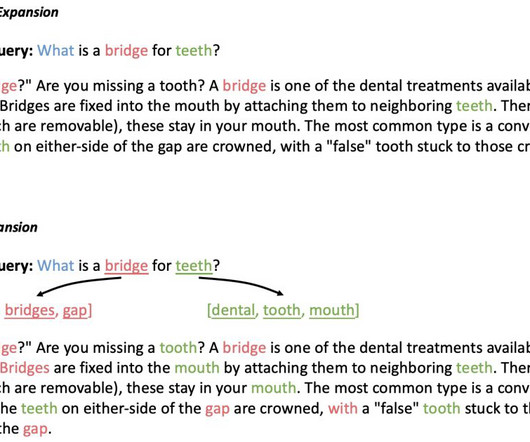

Your company's AI assistant confidently tells a customer it's processed their urgent withdrawal request, except it hasn't, because it misinterpreted the API documentation. These are systems that engage in conversations and integrate with APIs but don't create stand-alone content like emails, presentations, or documents.
