Take manual snapshots and restore in a different domain spanning across various Regions and accounts in Amazon OpenSearch Service

AWS Big Data

OCTOBER 11, 2024

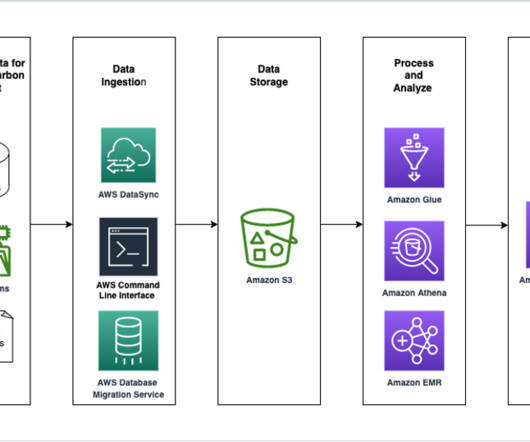

Snapshots are crucial for data backup and disaster recovery in Amazon OpenSearch Service. These snapshots allow you to generate backups of your domain indexes and cluster state at specific moments and save them in a reliable storage location such as Amazon Simple Storage Service (Amazon S3). Snapshots are not instantaneous.

Let's personalize your content