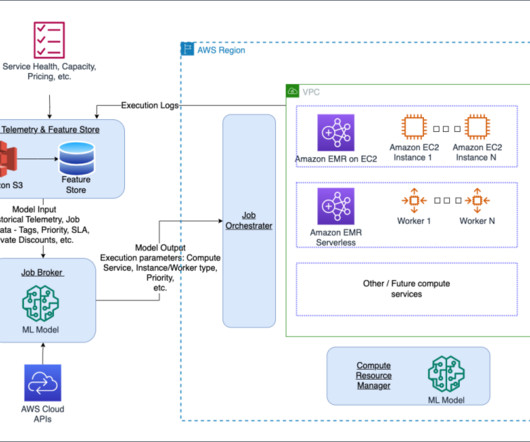

MCP, ACP, and Agent2Agent set standards for scalable AI results

CIO Business Intelligence

MAY 22, 2025

Open protocols that standardize how AI systems connect, communicate, and absorb context are bringing much-needed maturity to an AI market in which IT leaders are anxious to pivot from experimentation to practical solutions. "I affectionately refer to MCP as the plumbing stack." It also helps IT leaders avoid vendor lock-in, he adds.
