9 IT resolutions for 2025

CIO Business Intelligence

JANUARY 6, 2025

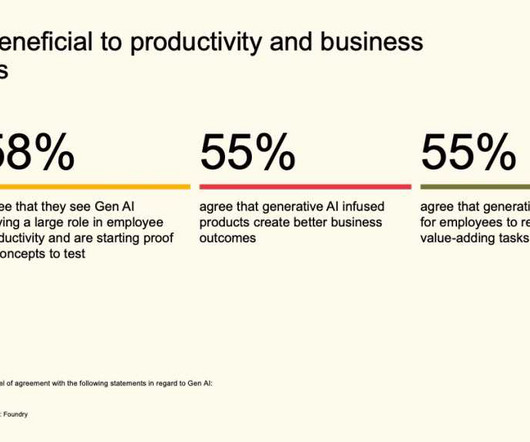

Balancing the rollout with proper training, adoption, and careful measurement of costs and benefits is essential, particularly while securing company assets in tandem, says Ted Kenney, CIO of tech company Access. Success, he says, will be measured by user adoption, a reduction in manual tasks, and an increase in sales and customer satisfaction.
