It’s 2025. Are your data strategies strong enough to de-risk AI adoption?

CIO Business Intelligence

DECEMBER 11, 2024

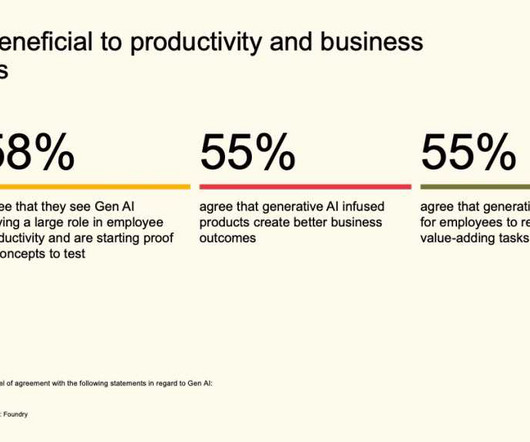

If 2023 was the year of AI discovery and 2024 the year of AI experimentation, then 2025 will be the year organisations seek to maximise AI-driven efficiencies and use AI for competitive advantage. Lack of oversight creates a different kind of risk, with shadow IT posing significant security threats to organisations.
