Advancing Automatic Knowledge Extraction with PubMiner AI

Ontotext

NOVEMBER 22, 2024

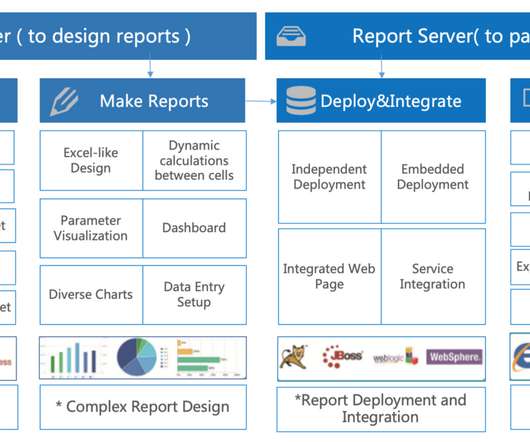

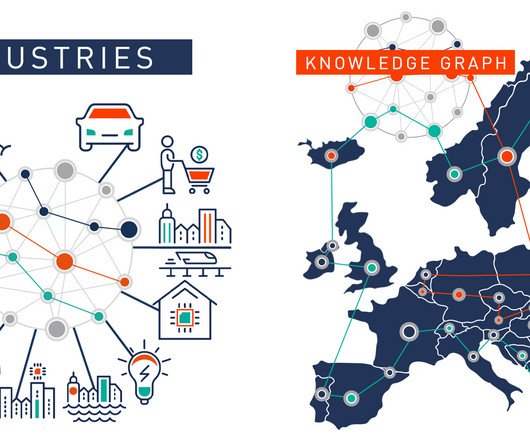

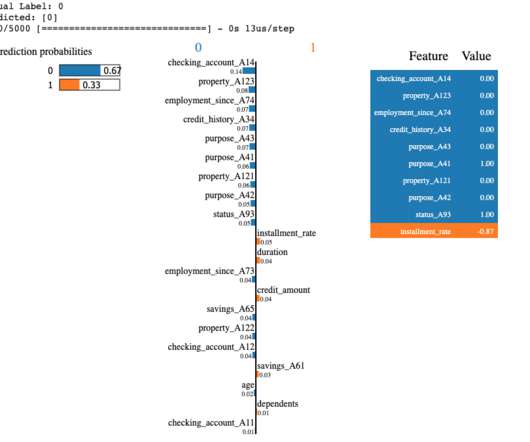

Finally, it enables building a subgraph that represents the extracted knowledge, normalized to reference data sets.

PubMiner AI offers a comprehensive suite of features designed to streamline research and discovery.

Automated Report Generation: Summarizes research findings and trends into comprehensive, digestible reports.
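The subgraph-building step mentioned above can be illustrated with a minimal Python sketch. It assumes that extracted knowledge arrives as (subject, predicate, object) triples of raw text mentions, and that a reference data set can be modeled as a plain lookup table from surface forms to canonical identifiers. The names `REFERENCE_IDS`, `normalize` and `build_subgraph`, and the `REF:` identifiers, are hypothetical placeholders, not PubMiner AI's actual API or method. A production system would more likely rely on proper entity linking than on exact-match lookup.

```python
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical reference data set: normalized surface forms -> canonical IDs.
# The REF:* identifiers are illustrative placeholders, not real vocabulary IDs.
REFERENCE_IDS: Dict[str, str] = {
    "aspirin": "REF:0001",
    "acetylsalicylic acid": "REF:0001",
    "headache": "REF:0002",
    "cephalalgia": "REF:0002",
}


def normalize(mention: str, reference: Dict[str, str]) -> Optional[str]:
    """Map a raw entity mention to a canonical ID, or None if it is unknown."""
    key = " ".join(mention.lower().split())  # case-fold and collapse whitespace
    return reference.get(key)


def build_subgraph(extracted: List[Triple], reference: Dict[str, str]) -> Set[Triple]:
    """Keep only triples whose subject and object both resolve to reference IDs.

    Predicates are kept as-is. Because the result is a set, different surface
    forms of the same fact collapse into a single edge.
    """
    subgraph: Set[Triple] = set()
    for subj, pred, obj in extracted:
        s_id = normalize(subj, reference)
        o_id = normalize(obj, reference)
        if s_id is not None and o_id is not None:
            subgraph.add((s_id, pred, o_id))
    return subgraph


if __name__ == "__main__":
    raw = [
        ("Aspirin", "treats", "headache"),
        ("acetylsalicylic  acid", "treats", "Cephalalgia"),
        ("Aspirin", "treats", "unknown condition"),
    ]
    for triple in sorted(build_subgraph(raw, REFERENCE_IDS)):
        print(triple)
```

Running the script prints a single edge, `('REF:0001', 'treats', 'REF:0002')`. Both phrasings of the same fact normalize to the same identifiers, and the triple with an unresolvable mention is discarded rather than added to the subgraph.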
