Enriching metadata for accurate text-to-SQL generation for Amazon Athena

AWS Big Data

OCTOBER 14, 2024

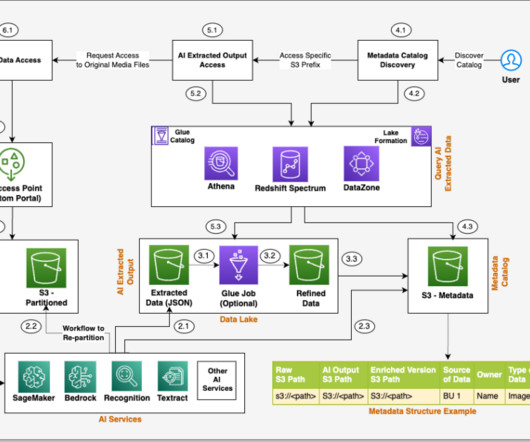

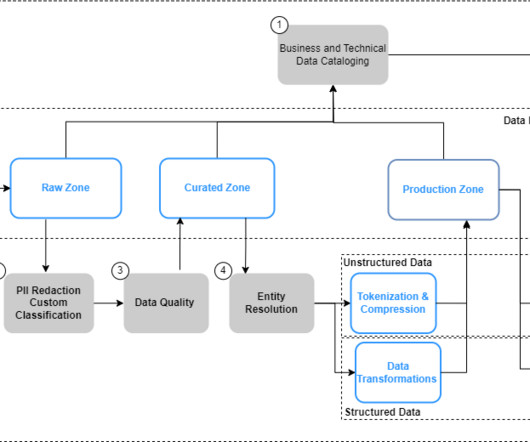

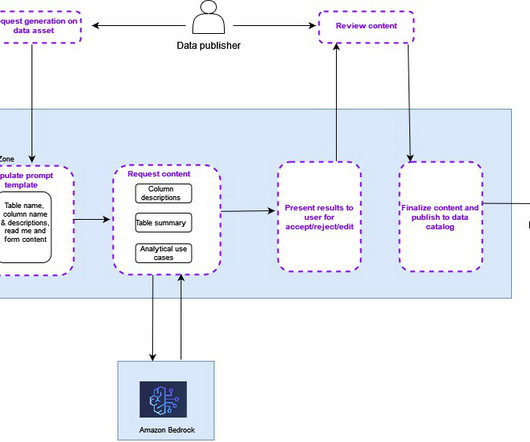

Amazon Athena provides interactive analytics service for analyzing the data in Amazon Simple Storage Service (Amazon S3). Amazon Redshift is used to analyze structured and semi-structured data across data warehouses, operational databases, and data lakes. Table metadata is fetched from AWS Glue.

Let's personalize your content