Measuring Models' Uncertainty: Conformal Prediction

Dataiku

OCTOBER 16, 2020

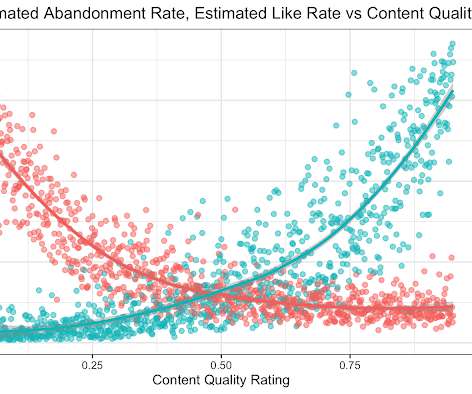

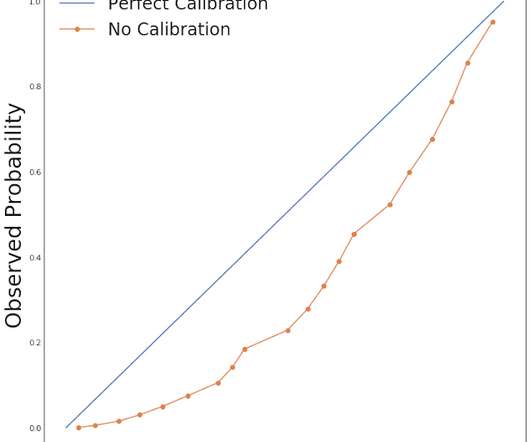

Whether you are designing machine learning (ML) models or monitoring them in production, estimating the uncertainty of predictions is critical. It helps identify suspicious samples during model training and detect out-of-distribution samples at inference time.
