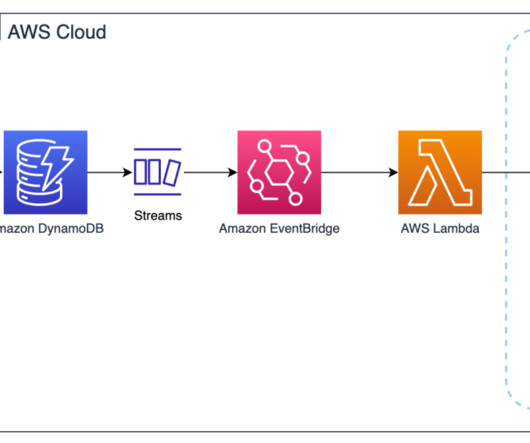

Run Apache XTable in AWS Lambda for background conversion of open table formats

AWS Big Data

NOVEMBER 26, 2024

This post was co-written with Dipankar Mazumdar, Staff Data Engineering Advocate with AWS Partner OneHouse. Data architecture has evolved significantly to handle growing data volumes and diverse workloads. The synchronization process in XTable works by translating table metadata using the existing APIs of these table formats.

Let's personalize your content