How to Implement Data Engineering in Practice?

Analytics Vidhya

DECEMBER 1, 2021

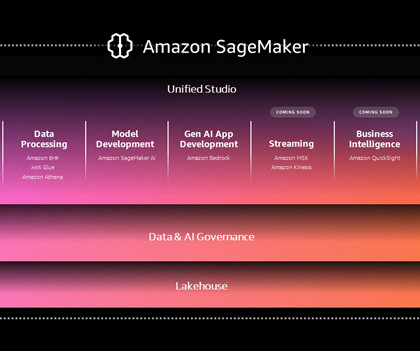

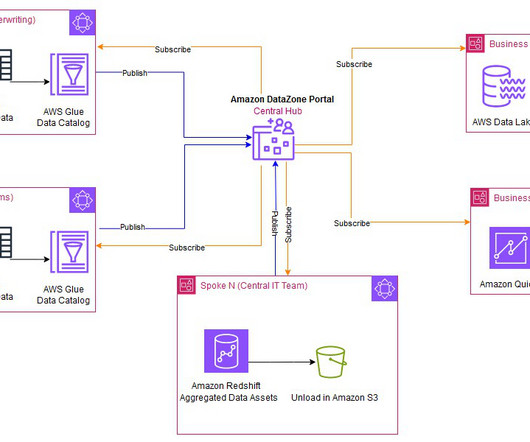

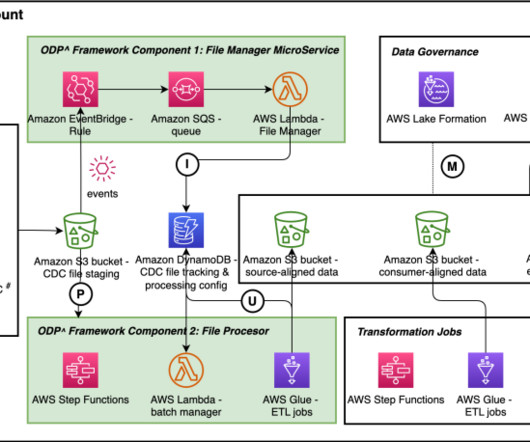

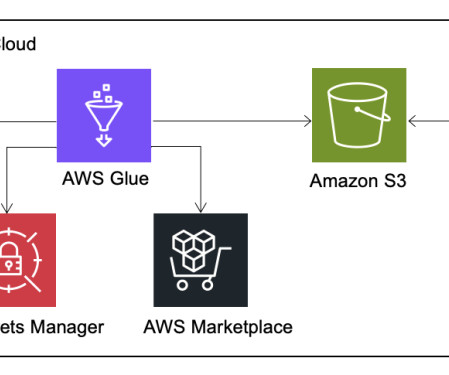

Topics covered:

- Components of Data Engineering
- Object Storage
- Object Storage MinIO
- Install Object Storage MinIO
- Data Lake with Buckets Demo
- Data Lake Management
- Conclusion
- References
- What is Data Engineering?

The post "How to Implement Data Engineering in Practice?" appeared first on Analytics Vidhya.
